Elasticsearch is a real-time, distributed, open source full-text search and analytics engine. It is developed in Java and used by many large organizations around the world, including as the search backend for Single Page Application (SPA) projects. Releases up to 7.10 were licensed under the Apache License 2.0; from 7.11 onward, which includes the v7.12.0 release described below, the source is dual-licensed under the Elastic License and the SSPL.


Install Elasticsearch from archive on Linux or MacOS

Elasticsearch is available as a .tar.gz archive for Linux and MacOS.

This package contains both free and subscription features. Start a 30-day trial to try out all of the features.

The latest stable version of Elasticsearch can be found on the Download Elasticsearch page. Other versions can be found on the Past Releases page.

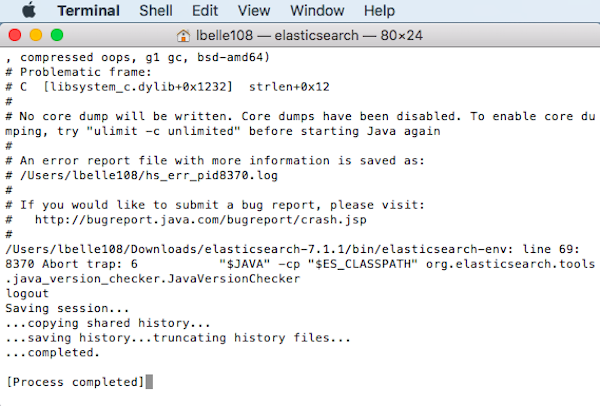

Elasticsearch includes a bundled version of OpenJDK from the JDK maintainers (GPLv2+CE). To use your own version of Java, see the JVM version requirements.

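To install the Linux archive for Elasticsearch v7.12.0, download the .tar.gz archive together with its published checksum, compare the SHA of the downloaded archive against that checksum, and unpack the archive.

The directory created by unpacking the archive is known as $ES_HOME.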

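The MacOS archive for Elasticsearch v7.12.0 is installed the same way: download the archive and its published checksum, compare the SHA of the downloaded archive against the checksum, and unpack it.

As on Linux, the unpacked directory is known as $ES_HOME.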
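The checksum comparison mentioned above can be sketched in Python. This is only an illustration, not the official install procedure: it assumes the published checksum is a SHA-512 digest stored in a small text file whose first field is the hex digest, and the function names are made up for this example.

```python
import hashlib
from pathlib import Path


def sha512_of(path, chunk_size=1 << 20):
    """Return the hex SHA-512 digest of a file, reading it in chunks."""
    digest = hashlib.sha512()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(archive_path, checksum_path):
    """Compare an archive's SHA-512 with the digest in a checksum file.

    Assumption: the checksum file starts with the hex digest, optionally
    followed by whitespace and the archive's file name.
    """
    fields = Path(checksum_path).read_text().split()
    if not fields:
        return False  # empty checksum file: nothing to compare against
    return sha512_of(archive_path) == fields[0].lower()
```

If `matches_published_checksum` returns False, the download is incomplete or corrupted and should be fetched again rather than unpacked.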

Some commercial features automatically create indices within Elasticsearch. By default, Elasticsearch is configured to allow automatic index creation, and no additional steps are required. However, if you have disabled automatic index creation in Elasticsearch, you must configure action.auto_create_index in elasticsearch.yml to allow the commercial features to create the indices they need.


If you are using Logstash or Beats then you will most likely require additional index names in your action.auto_create_index setting, and the exact value will depend on your local configuration. If you are unsure of the correct value for your environment, you may consider setting the value to *, which will allow automatic creation of all indices.

Elasticsearch can be started from the command line by running ./bin/elasticsearch from the $ES_HOME directory.

If you have password-protected the Elasticsearch keystore, you will be prompted to enter the keystore’s password. See Secure settings for more details.

By default, Elasticsearch runs in the foreground, prints its logs to the standard output (stdout), and can be stopped by pressing Ctrl-C.

All scripts packaged with Elasticsearch require a version of Bash that supports arrays and assume that Bash is available at /bin/bash. As such, Bash should be available at this path either directly or via a symbolic link.

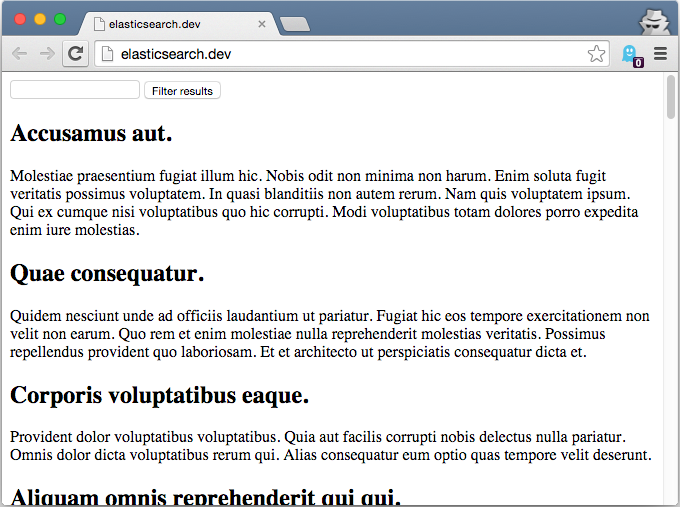

You can test that your Elasticsearch node is running by sending an HTTP GET request to port 9200 on localhost. A running node should reply with a JSON document that describes the node, including its name, cluster name, and version.
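As a rough illustration of this check, the Python sketch below requests the root endpoint and returns the parsed JSON. It assumes an unsecured node at the default http://localhost:9200; the function name and the choice to return None on any failure are assumptions made for this example.

```python
import json
import urllib.error
import urllib.request


def fetch_node_info(base_url="http://localhost:9200", timeout=5.0):
    """Return the root-endpoint JSON as a dict, or None if unreachable."""
    # Ignore any proxy environment variables: the node is expected to be local.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(base_url + "/", timeout=timeout) as resp:
            return json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, HTTP error status, or a non-JSON body.
        return None
```

A call such as `fetch_node_info()` returns a dictionary when the node is up, and None when nothing is listening on the port or the node rejects the request.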

Log printing to stdout can be disabled using the -q or --quiet option on the command line.

To run Elasticsearch as a daemon, specify -d on the command line, and record the process ID in a file using the -p option, for example ./bin/elasticsearch -d -p pid.

If you have password-protected the Elasticsearch keystore, you will be prompted to enter the keystore’s password. See Secure settings for more details.

Log messages can be found in the $ES_HOME/logs/ directory.

To shut down Elasticsearch, kill the process ID recorded in the pid file, for example with pkill -F pid.
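The same shutdown step can be scripted. The following is a minimal Python sketch for Linux and MacOS, assuming the pid file contains only the process ID as decimal text; the function name is hypothetical.

```python
import os
import signal
from pathlib import Path


def stop_from_pid_file(pid_file):
    """Send SIGTERM to the process whose ID is stored in pid_file.

    Returns the PID that was signalled, or None if the file is missing,
    unreadable as an integer, or points at a process that no longer exists.
    """
    try:
        pid = int(Path(pid_file).read_text().strip())
    except (FileNotFoundError, ValueError):
        return None
    try:
        os.kill(pid, signal.SIGTERM)
    except ProcessLookupError:
        return None  # stale pid file: the process is already gone
    return pid
```

SIGTERM is used here so that the process gets a chance to shut down cleanly; the sketch does not wait for the process to exit.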

The Elasticsearch .tar.gz package does not include the systemd module. To manage Elasticsearch as a service, use the Debian or RPM package instead.

Elasticsearch loads its configuration from the $ES_HOME/config/elasticsearch.yml file by default. The format of this config file is explained in Configuring Elasticsearch.

Any settings that can be specified in the config file can also be specified on the command line using the -E syntax, for example ./bin/elasticsearch -d -Ecluster.name=my_cluster -Enode.name=node_1.

Typically, any cluster-wide settings (like cluster.name) should be added to the elasticsearch.yml config file, while any node-specific settings such as node.name could be specified on the command line.
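When nodes are started from a script, the -E arguments can be generated from a dictionary of settings. The sketch below shows one way to do that in Python; the helper name, its parameters, and the example values are assumptions for illustration, and only the -d, -p, and -E options described above are used.

```python
def build_command(settings, daemonize=False, pid_file=None,
                  executable="./bin/elasticsearch"):
    """Build an argv list that passes each setting with the -E syntax."""
    argv = [executable]
    if daemonize:
        argv.append("-d")
    if pid_file is not None:
        argv += ["-p", str(pid_file)]
    # Each setting becomes a single -Ekey=value argument.
    argv += [f"-E{key}={value}" for key, value in settings.items()]
    return argv


# Hypothetical node-specific setting supplied on the command line.
command = build_command({"node.name": "node_1"}, daemonize=True, pid_file="pid")
```

The resulting list can be passed to `subprocess.run(command, cwd=es_home)`, where `es_home` stands for the unpacked $ES_HOME directory.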

The archive distributions are entirely self-contained. All files and directories are, by default, contained within $ES_HOME, the directory created when unpacking the archive.

This is very convenient because you don’t have to create any directories to start using Elasticsearch, and uninstalling Elasticsearch is as easy as removing the $ES_HOME directory. However, it is advisable to change the default locations of the config directory, the data directory, and the logs directory so that you do not delete important data later on.

| Type | Description | Default Location | Setting |
|---|---|---|---|
| home | Elasticsearch home directory or $ES_HOME | Directory created by unpacking the archive | |
| bin | Binary scripts including elasticsearch to start a node and elasticsearch-plugin to install plugins | $ES_HOME/bin | |
| conf | Configuration files including elasticsearch.yml | $ES_HOME/config | ES_PATH_CONF |
| data | The location of the data files of each index / shard allocated on the node. Can hold multiple locations. | $ES_HOME/data | path.data |
| logs | Log files location. | $ES_HOME/logs | path.logs |
| plugins | Plugin files location. Each plugin will be contained in a subdirectory. | $ES_HOME/plugins | |
| repo | Shared file system repository locations. Can hold multiple locations. A file system repository can be placed into any subdirectory of any directory specified here. | Not configured | path.repo |

You now have a test Elasticsearch environment set up. Before you start serious development or go into production with Elasticsearch, you must do some additional setup:

- Learn how to configure Elasticsearch.

- Configure important Elasticsearch settings.

- Configure important system settings.



The primary development and deployment environment for MediaWiki is Linux and Unix systems; Mac OS X is Unix under the hood, so running MediaWiki on it is fairly straightforward.

Get requirements

Instead of setting up the required software separately, you might be better off starting with MAMPstack + MediaWiki, which gives you Apache, MySQL, PHP and MediaWiki in one convenient package.

Alternatively, use the XAMPP application for an easier installation; it is highly recommended for beginners.

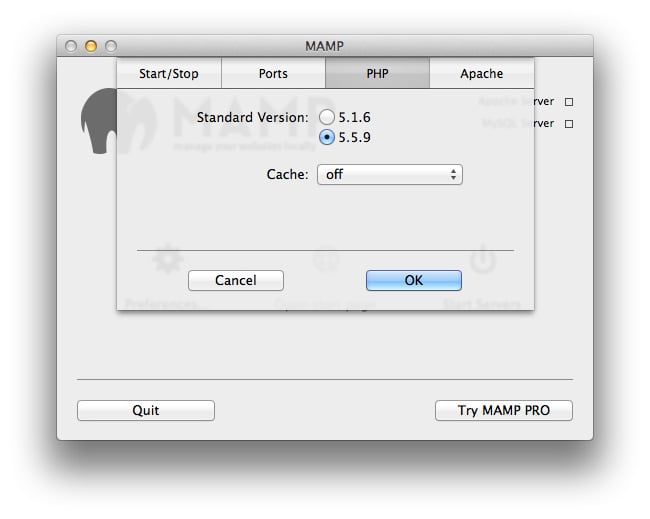

MAMP

For a personal Wiki environment, you may find it easier to install MAMP, if you are using Mac OS X 10.4 or newer (MAMP will not run on Mac OS X 10.3 or older). This installer will provide its own Apache, MySQL and PHP (with eAccelerator (an updated MMCache) and Zend Optimizer) and a nice simple control panel, running under your login (in other words, this really isn't configured to work as a production server, so don't do that). If you turn off the Mac's built-in personal web server, you can run MAMP's Apache on port 80.

You will still need to install ImageMagick, but otherwise everything MediaWiki needs will be there. Note that with MAMP your personal Web directory defaults to /Applications/MAMP/htdocs instead of the Mac's usual /Users/yourname/Sites. If you don't want to store your own data files in the Applications directory, open MAMP's Preferences, select the Apache tab, and change the document root to the directory of your choice. /Users/yourname/Sites is an excellent choice. Clicking on MAMP's Open Start page button will open http://localhost:8888/MAMP/ in your browser to show you how things are configured.


Install MediaWiki

TeX support


See Manual talk:Running MediaWiki on Mac OS X#Mathematics for explicit instructions on how to add TeX support for MacOS X.

Homebrew Setup

Prerequisites

- Homebrew installed

- Gerrit account set up

- If MediaWiki was previously set up with Docker:

  - Create a new directory to clone MediaWiki into (allowing for parallel setups so that each can have its own LocalSettings.php)

  - Stop the container (both this and the Docker setup run on port 8080, so only one can be running at any given time)

Steps

- Create an empty mediawiki directory and download MediaWiki from Git into a w folder inside the mediawiki folder

- Install Composer 1.x (use wget rather than brew for this since the latter installs 2.x and MediaWiki only works with 1.x). After this step, composer should be accessible.

- Update MediaWiki dependencies

- Set up PHP, Apache, MariaDB, Redis, ElasticSearch (Docker) locally and update Apache config per https://www.kostaharlan.net/posts/mediawiki-homebrew-php/

- Start services

- Make sure all services are running (by running brew services, and docker ps for ElasticSearch) and navigate to localhost:8080/wiki/index.php

- Go through the MediaWiki installation steps (the installation link is at the bottom of the page). At the end of the process, save LocalSettings.php to the project directory (mediawiki/w)

- After these steps, there should be a barebone MediaWiki installation (no skins/styling/data) at localhost:8080.


Setting up XDebug with PhpStorm

- Install Xdebug via pecl

- Add xdebug.mode=debug and xdebug.client_port=9000 to php.ini (check the config file path by running php --ini)

- Verify Xdebug shows up when running php --version

- Restart Apache & PHP

- In PhpStorm: Preferences > Languages & Frameworks > PHP > CLI interpreter > PHP executable > Select Homebrew PHP path (for example /usr/local/Cellar/php@7.4/7.4.15/bin/php), make sure debug port includes 9000

- Install Xdebug browser extension

- In PhpStorm: Listen for PHP Debug Connections (phone icon on top right)

- Verify setup in PhpStorm: PhpStorm > Run > Web Server Debug Validation > Enter http://localhost:8080/w/ for “Url to validation script”

composer can get stuck at a breakpoint in PhpStorm after enabling Xdebug this way. Check the PhpStorm debug console if composer commands are taking a long time!